Vision

Article curated by Ginny Smith

Vision is arguably a human's primary sense - we use it to spot danger and navigate our environment. Despite this, there is still plenty we don't know about how this sense works - both in us and in other animals. In the future, this understanding will be vital to create robots that can see and analyse visual information as well as we can.

There is a huge amount we don't know about the human sense of vision. As we seek to develop better and better computational vision, we become more aware of some of these unknowns. For example, our ability to recognise a blurry face despite the loss of detailed information suggests we must commonly rely on low-resolution data, using our memories to fill in the blanks. We also don't fully understand how our brain 'cancels out' the effects of blinking, eye movements and the fact that only a tiny region of the retina can see in high resolution, to provide a seemingly static and clear image of the world.

Learn more about Vision.

We know that cells in the eyes of many animals, from mice to humans, can detect motion - this is essential to avoid predators or track prey. But even this seemingly simple ability isn't fully understood. The retina of the eye is packed with photoreceptor cells - the rods and cones that allow us to detect light. Behind these, there are layers of cells that convert this information into a form that the brain can understand - these include amacrine cells and ganglion cells. Using mice, researchers from Washington University discovered that a subtype of amacrine cell excites the ganglion cells when motion is detected. This information can then be relayed to the brain.

Despite this step forward, Daniel Kerschensteiner - leader of the research team - highlighted just how little we still know about the retina: "There are many elements in the retinal circuitry that we haven’t figured out yet. We know the signals from the rods and cones are transmitted to the inner retina - where the amacrine and ganglion cells are located - and that’s really where the ‘magic’ happens that allows us to see what we see. Unfortunately, we still have a very limited understanding of what most of the cells in the inner retina actually do."

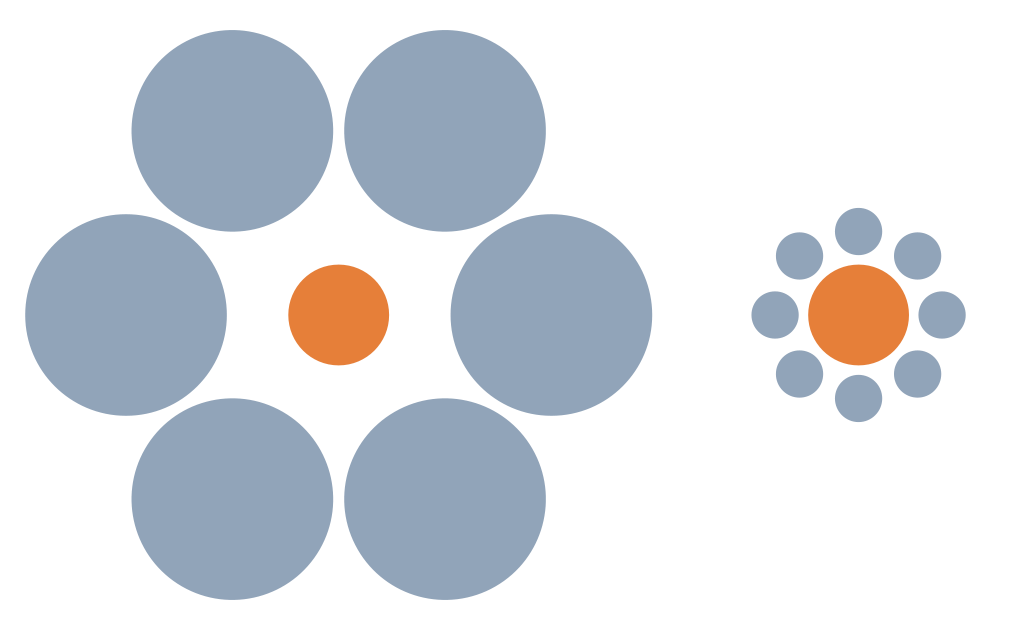

Once the signals from the eye begin their journey to the brain, things become even more complicated. The classical view says there are two streams of visual processing known as the dorsal and ventral or ‘what & where’ streams. The ventral or ‘what’ stream provides us with conscious recognition of the object and its details, but is slow and prone to illusions. The dorsal ‘where’ stream, meanwhile, drives our actions towards an object, often quickly and subconsciously. This idea is supported by studies on patients with damage to areas involved in one stream but not the other, who may, for example, be able to see an object but not use it to guide motion. Some studies have also shown that while we fall for illusions such as the Titchener illusion (see below) when asked about them consciously, we use the actual (rather than illusory) size to drive movements.

However, more recent studies have raised questions about this distinction - showing that if visual tasks and those requiring actions are appropriately matched, there is no difference in the size of the illusion seen. The dissociations seen in patients also seem to be less clear cut than originally thought. Other research has supported the idea that these processing pathways are less distinct than first thought, working together as well as separately. Studies in primates suggest there might even be three processing systems - one for shape, one for colour and one for movement, location, and spatial organisation.

New research has also indicated that visual processing might be intimately linked with audio processing. Changes in ear canal pressure, which scientists think are caused by the middle-ear muscles moving the eardrum, have been matched up with eye movements: when our eyes move to an object, so do our eardrums. Scientists have suggested that both motions are directed by the brain, but it’s also possible that the eyes send a signal to the brain, which then tells the ears to follow, or that the ears lead and the eyes follow, or that both happen in one big feedback loop. It’s thought that syncing the ears and eyes helps visual processing, because it helps us identify which object is making which sound. However, the discovery is still new and there remains lots to find out about it.

Learn more about Synchronised ears and eyes.


Whatever route it takes, information from the eyes is processed in the visual cortex, located in the occipital lobe at the back of the brain. The primary visual area (V1) is the first part of the cortex to receive information from the eye, which travels via the lateral geniculate nucleus. From here, the areas involved become more complicated and less clear cut. It used to be thought that the information then travelled through various other areas, which processed increasingly complicated elements of the scene - starting with edges and shapes, then moving on to colour, movement, and areas that respond to attention. However, we now know that rather than forming a simple hierarchy, these areas connect in complex patterns - feeding back to lower areas as well as forward to higher ones. V1, for example, can be modulated by attention, something previously thought to be taken into account only by V4. There are also disagreements about the exact location and extent of each area, which raises the question of whether it is useful to divide the visual cortex up at all, or whether a network approach would be more useful in helping us understand how this complex system works.

Vision Problems

It has long been thought that there is a link between reading or studying and vision problems such as short-sightedness (myopia), but recent research suggests the important factor is not how long children spend staring at something close, but how long they spend outside. The mechanism behind this is as yet unknown, but one idea is that plenty of bright, natural light is protective for the developing eye. Others argue that it is the viewing of landscapes and objects a long way away that has the effect. Neither theory has yet been confirmed.

Research is ongoing in the area, to determine whether outdoor time really can prevent myopia developing. Scientists are also looking into alternatives, such as bright, daylight-mimicking lamps, for children in places where natural light is limited or where outdoor play is impossible.

Learn more about Myopia Boom.

Visual Illusions

Some people think the Titchener illusion, in which a circle surrounded by small circles looks bigger than an identical circle surrounded by large ones, is a version of a perspective illusion - we think the small circles are far away, so the one in the middle must be huge, as it looks so big from such a distance. But more research is needed to figure out exactly what is going on in our brains when we see this illusion.

One theory for why the moon looks bigger near the horizon than overhead is that when we view objects like birds or clouds in the sky, those on the horizon are further away than those above us, so we make the same assumption about the moon. Our brain then 'corrects' for the assumed distance of the moon on the horizon, making it seem bigger. This is an example of size constancy. However, when asked, few people consciously perceive the horizon moon as being further away, casting doubt on this interpretation.
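The size constancy idea can be put into numbers. The sketch below is only an illustration: it assumes the brain infers an object's size from its visual angle and an assumed distance, and the two distance values are made up for the example. The moon's visual angle is roughly half a degree wherever it is in the sky.

```python
import math

def implied_size(visual_angle_deg: float, assumed_distance: float) -> float:
    """Physical size implied by a visual angle seen at an assumed distance."""
    theta = math.radians(visual_angle_deg)
    return 2.0 * assumed_distance * math.tan(theta / 2.0)

MOON_ANGLE_DEG = 0.5  # about the same at the horizon and overhead

# Hypothetical assumed distances in arbitrary units (illustration only).
overhead = implied_size(MOON_ANGLE_DEG, assumed_distance=1.0)
horizon = implied_size(MOON_ANGLE_DEG, assumed_distance=1.5)

ratio = horizon / overhead
print(f"Horizon moon seems {ratio:.2f} times as large")
```

With the same visual angle, the implied size scales directly with the assumed distance, so a horizon that is assumed to be further away gives a moon that seems bigger.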

Another idea is that it is similar to the Titchener illusion - when on the horizon, the moon is surrounded by small objects like trees and houses, making it seem bigger. The large expanses of sky around the moon when it is overhead, however, have the opposite effect. Interestingly, looking at the moon with your head upside-down (e.g. by bending over and looking at it through your legs) reduces the size of the illusion, though no-one knows why!

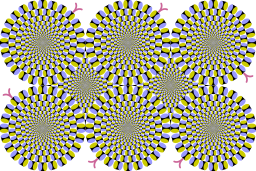

One suggestion for the mechanism behind this illusory motion is that areas with a greater difference in luminance (black & yellow or blue & white) are processed more quickly by the brain than those with more similar luminance (white & yellow or black & blue). This difference in processing speed could create the illusion of motion. However, this mechanism can’t explain other illusions that also produce illusory motion, so for now their mechanism remains a mystery.
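To see why those particular colour pairs differ so much in luminance, here is a rough sketch. It assumes pure black, blue, yellow and white on a screen (the colours in any real image will differ) and uses the standard Rec. 709 weights for the luminance of linear RGB values.

```python
# Rec. 709 weights: how much red, green and blue each contribute to luminance.
WEIGHTS = (0.2126, 0.7152, 0.0722)

# Assumed pure screen colours as linear RGB (illustration only).
COLOURS = {
    "black": (0.0, 0.0, 0.0),
    "blue": (0.0, 0.0, 1.0),
    "yellow": (1.0, 1.0, 0.0),
    "white": (1.0, 1.0, 1.0),
}

def luminance(rgb):
    """Relative luminance of a linear RGB colour (0 = black, 1 = white)."""
    return sum(w * c for w, c in zip(WEIGHTS, rgb))

def luminance_difference(a, b):
    """Absolute luminance difference between two named colours."""
    return abs(luminance(COLOURS[a]) - luminance(COLOURS[b]))

for pair in [("black", "yellow"), ("blue", "white"),
             ("white", "yellow"), ("black", "blue")]:
    print(pair, round(luminance_difference(*pair), 4))
```

Blue contributes very little to luminance, so blue sits close to black and yellow sits close to white. That is why black & yellow and blue & white are high-contrast pairs, while white & yellow and black & blue are not.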

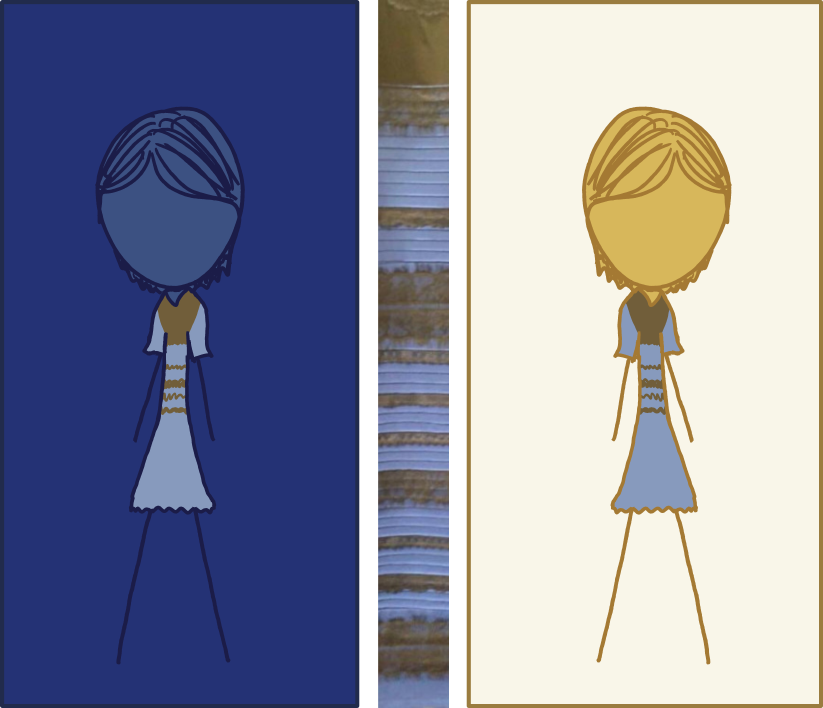

In February 2015, the internet went crazy over a picture that wasn’t of a cat or Kim Kardashian’s bottom. The offending picture was of a bodycon dress with lace trim. But the question that was dividing opinion wasn’t whether it was flattering or suitable for a wedding but what colour it was. Some people looked at the image and saw a blue dress with black detail while others swore blind it was white with gold trim. So what was going on? How can an innocent picture cause such a divide? Scientists were puzzled, and began to investigate.

It was soon confirmed that the dress itself was blue & black, but in the image, the pixels were actually light blue and brown. A study of 1,400 people discovered that 57% saw it as blue and black, 30% saw it as white and gold, and 11% saw it as light blue and brown. However, BuzzFeed’s poll found that 67% of respondents saw it as white & gold, although this difference could be down to the fact that only two options were given in the BuzzFeed poll. While the exact proportions are unclear, what we do know is that people see things very differently when looking at the image.

Scientists think that the difference is down to what assumption your brain makes about lighting. The picture itself doesn’t make it clear what kind of lighting the dress is under, so you effectively have to guess. If you assume you are viewing the dress in cool daylight, it is likely to look white/gold as you ‘factor out’ the blue tinge as down to lighting. If, however, you assume a warm incandescent light, you will see blue/black. These guesses aren’t conscious, and most people can’t will themselves to see the opposite colour-way, but scientists discovered that they could change what colour people saw by giving them cues as to the kind of lighting involved.
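The ‘factoring out’ step can be sketched with a very simple model. This is not the method used in the study: it assumes the brain divides each colour channel by the colour of the light it thinks is falling on the scene (a von Kries-style correction), and every pixel and lighting value below is made up for illustration rather than measured from the photograph.

```python
# Hypothetical pixel colours (RGB, 0 to 1) standing in for the photo's
# light blue body and brown trim.
BODY = (0.55, 0.60, 0.80)
TRIM = (0.45, 0.35, 0.20)

# Hypothetical colours of the light the brain might assume.
COOL_DAYLIGHT = (0.75, 0.85, 1.00)
WARM_INCANDESCENT = (1.00, 0.85, 0.60)

def discount_illuminant(pixel, illuminant):
    """Estimate the surface colour by dividing out the assumed light."""
    return tuple(min(1.0, p / i) for p, i in zip(pixel, illuminant))

def blueness(rgb):
    """How much the blue channel exceeds the average of red and green."""
    r, g, b = rgb
    return b - (r + g) / 2

def colourfulness(rgb):
    """Spread between strongest and weakest channel (0 = neutral grey)."""
    return max(rgb) - min(rgb)

for name, light in [("cool daylight", COOL_DAYLIGHT),
                    ("warm incandescent", WARM_INCANDESCENT)]:
    body = discount_illuminant(BODY, light)
    trim = discount_illuminant(TRIM, light)
    print(name,
          "body:", [round(c, 2) for c in body],
          "trim:", [round(c, 2) for c in trim])
```

With these made-up numbers, assuming cool light leaves the body nearly neutral (whitish) and the trim yellowish (gold), while assuming warm light leaves the body strongly blue and pushes the trim towards a dark, nearly neutral shade.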

Which of these camps you fall into could be down to a number of things. One suggestion is that ‘larks’, who wake early and spend more waking hours in daylight, are more likely to assume that daylight is the kind of lighting they are seeing the dress under. This fits with the study’s finding that women & older people (who are more likely to be larks) saw the dress as white & gold more often. However, others think it might be down to the yellowing of the corneas that happens with age, and some experts argue that neither of these suggestions fits, and there must be some other, as yet unknown factor involved.

So what made this particular image of the dress so confusing for our poor brains? It seems it was photographed bathed in both natural light from a nearby window and artificial light in the shop. The best explanation is that this means your brain received ambiguous cues, so had to rely on past experience. Which experience your brain picks affects which colour combination you see. However, this is far from being a definitive explanation - more research is needed to discover if this is actually what is going on.

Animal Vision

There are many aspects of animal vision we don't understand, and scientists hope that improving our knowledge might be useful in creating artificial vision. One mystery is the reason some birds, like chickens, bob their heads as they walk. To find out more, researchers put pigeons on a treadmill, and found that the bobbing stops if their surroundings are stable. This suggests the birds are using the head movements to keep the appearance of a still world as they move - something we do via tiny, unconscious eye movements. The bobbing means that the bird's head only moves in short bursts (which are easy for the brain to cancel out), and is then still as the body moves to catch up. This is similar to the technique of 'spotting' used by dancers - the head is left behind as they start a pirouette, and then snapped round at the last minute, so it is still for as long as possible.
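The hold-and-burst pattern described above can be sketched as a toy simulation. The walking speed, timings and the 70% hold time are made-up values for illustration, not measurements from the pigeon experiments.

```python
def head_track(body_speed, steps_per_cycle, hold_steps, n_cycles, dt):
    """Head position in the world over time for a hold-then-thrust bob.

    During the hold the head stays fixed in space while the body walks on;
    during the thrust it moves fast enough to make up the lost ground.
    """
    thrust_steps = steps_per_cycle - hold_steps
    thrust_speed = body_speed * steps_per_cycle / thrust_steps
    head = 0.0
    track = [head]
    for step in range(n_cycles * steps_per_cycle):
        in_hold = (step % steps_per_cycle) < hold_steps
        if not in_hold:
            head += thrust_speed * dt
        track.append(head)
    return track

# Hypothetical values: 0.3 m/s walk, 0.2 s bob cycle, head held 70% of the time.
DT = 0.02
track = head_track(body_speed=0.3, steps_per_cycle=10, hold_steps=7,
                   n_cycles=3, dt=DT)

still = sum(1 for a, b in zip(track, track[1:]) if a == b)
print(f"Head is still for {still} of {len(track) - 1} time steps")
print(f"Head travelled {track[-1]:.2f} m, body travelled {0.3 * 30 * DT:.2f} m")
```

In this sketch the head covers the same ground as the body overall, but it does so in short bursts and is motionless in the world for most of each cycle.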

This much is well supported by evidence, but the question remains - why do birds use this method of keeping the whole head still, when we, and other animals, instead cancel out movement with just our eyes?

Learn more about Bird Head Bobbing.


Sequencing the photoreceptor proteins revealed that wobbegongs (a group of shark species) have only one kind of colour-detecting cone cell. However, other researchers have observed sharks being attracted or repelled by certain colours. For example, Gruber trained a lemon shark to select coloured targets from a mixture of ones of the same brightness and has identified the presence of colour-sensitive cones in the eyes of several shark species. The evidence is conflicting, and fundamentally, nobody knows whether or not sharks can see in colour.

Learn more about Whether sharks see colour.

Computer Vision

Researchers have attempted to replicate the muscle motion of the human eye using piezoelectric ceramics, which change in size slightly when a voltage is applied to them. This allows them to imitate the movements of the human eye more closely, which will be useful for research studies on human eye movement as well as for making video feeds from robots more intuitive.

This article was written by the Things We Don’t Know editorial team, with contributions from Ginny Smith, Johanna Blee, Rowena Fletcher-Wood, Joshua Fleming, and Holly Godwin.

This article was first published on 2017-04-19 and was last updated on 2018-01-27.

References


Kim, T., Soto, F., Kerschensteiner, D. (2015) An excitatory amacrine cell detects object motion and provides feature-selective input to ganglion cells in the mouse retina. eLife 4 DOI: 10.7554/eLife.08025

Lafer-Sousa, R., Hermann, K. L., Conway, B. R. (2015) Striking individual differences in color perception uncovered by ‘the dress’ photograph. Current Biology 25(13):R545-R546 DOI: 10.1016/j.cub.2015.04.053

Schultz, J., Ueda, J. (2013) Nested Piezoelectric Cellular Actuators for a Biologically Inspired Camera Positioning Mechanism. IEEE Transactions on Robotics 29(5):1125-1138 DOI: 10.1109/TRO.2013.2264863
