Artificial intelligence

Article curated by Holly Godwin

Leaps and bounds are being taken in the field of artificial intelligence, but will they benefit humanity? Are we capable of designing superintelligent beings and, if so, is our eagerness to advance technology going to outpace our understanding of the consequences that could result from superintelligence?

What is intelligence?

If we’re to understand artificial intelligence, we first have to understand what we mean by “intelligence” at all – and it turns out that this is by no means a straightforward concept. Researchers studying intelligence in animals have had a go at defining it and setting parameters, although criteria are often inconsistent from animal to animal and study to study. Some intelligence criteria include:

- tool use, tool making, and tool adaptation
- discrimination and comprehension of symbols and signs, alone or in sequences
- ability to anticipate and plan for an event
- play
- understanding how head/eye movements relate to attention
- ability to form complex social relationships
- empathy
- personality
- recognising yourself in a mirror

In other studies, intelligence is often defined as the ability to perform multiple cognitive tasks, rather than any one. We’re still not sure what the best intelligence criteria are, nor how to measure and weight them. In humans, factors like education and socioeconomic status affect performance, so test scores do not necessarily measure “raw intelligence”, but also training: in fact, people are getting better and better at IQ tests as we learn how to perform them. We also don’t know how widely intelligence varies from individual to individual, and therefore how wide a test sample is required to understand the breadth and depth of abilities. Differences in test scores can also reflect differences in motivation rather than ability, a factor which is not easy to standardise.

The Artificial Intelligence Revolution

Nowadays, we use machines to assist us with our everyday lives, from kettles to laptops, but technology is advancing at such a rate that machines may soon be more capable than us at all our day-to-day tasks. Household robots, once the domain of science fiction, are already becoming a reality.

Some argue that this is an exciting advancement for human society, while others argue that it may have negative consequences that we need to consider. Either way, it seems unlikely that research into artificial intelligence will stop, or that our concerns over AI will outweigh our scientific drive to advance.

What if machines are created that can exceed our capabilities? Having machines to independently carry out menial tasks such as cooking and cleaning is one thing, but “superintelligence” is defined as an intellect that is more capable than the best human brains in practically every field, including scientific creativity, general wisdom and social skills. So, we must ask… is superintelligence possible, and is this definition accurate?

A lot has changed in our understanding of artificial intelligence and, in fact, intelligence itself. For example, we used to think that some tasks (e.g. image recognition, driving) required general intelligence – the ability to be smart across many domains and adapt intelligence to new circumstances. It turns out that these (and many other tasks) can be solved with narrow, specialised intelligence. Indeed, we don’t have any good examples of general artificial intelligence at all.

For now, however, there are still discrepancies between the abilities of machines and humans. While machines can be more efficient in some domains, such as mathematical processing, humans are far better equipped in many others, such as facial recognition.

Can Artificial Intelligence Learn?

If artificial intelligence had the capacity to learn, a significant constraint on its abilities would be lifted, and a huge step towards superintelligence would have been made.

Recent research at Google was carried out to assess how efficient artificial intelligence was at recognising images and sound[1]. The machines in question are programmed with artificial neural networks, which allow them to ‘learn’. These artificial networks are statistical learning models, based on the neural networks found in the human brain.

An individual network consists of 10-30 stacked layers of artificial neurons. These layers work in sequence: when an input stimulus such as an image is presented, the first layer passes its results to the next layer, and so on until the final layer is reached. This final layer then produces a particular output, which serves as the network’s ‘answer’, allowing us to assess how well the network recognised the input image.
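The layer-to-layer hand-off described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the layer sizes, the random weights, the `tanh` activation and the names `make_layer`, `forward` and `classify` are all hypothetical, and an untrained toy like this recognises nothing. It simply shows how each layer feeds the next until the final layer gives its ‘answer’.

```python
import math
import random

def make_layer(n_in, n_out, rng):
    """Build one layer as (weights, biases) with random weights (untrained, illustrative)."""
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases

def forward(layers, inputs):
    """Pass the input through each layer in turn; return every layer's activations."""
    activations = [list(inputs)]
    for weights, biases in layers:
        prev = activations[-1]
        # Each neuron sums its weighted inputs and squashes the result with tanh.
        out = [math.tanh(sum(w * x for w, x in zip(row, prev)) + b)
               for row, b in zip(weights, biases)]
        activations.append(out)
    return activations

def classify(layers, inputs):
    """The network's 'answer' is the index of the strongest output in the final layer."""
    final = forward(layers, inputs)[-1]
    return max(range(len(final)), key=final.__getitem__)

rng = random.Random(0)
sizes = [4, 5, 5, 3]               # hypothetical: 4 input 'pixels', two hidden layers, 3 classes
layers = [make_layer(a, b, rng) for a, b in zip(sizes, sizes[1:])]
pixels = [0.2, 0.9, 0.4, 0.1]      # a made-up input 'image'
answer = classify(layers, pixels)  # an index between 0 and 2
```

A real image-recognition network differs mainly in scale and in having weights learned from examples rather than drawn at random.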

Understanding how each layer analyses the image differently can be challenging, but we do know that each layer focusses on progressively more abstract features of the image. For example, one of the earlier layers may focus on edges, while a later layer may focus on overall shapes or components. This is very similar to the process carried out in our brains: we break down images into features such as shape and colour to understand them, before recombining them into a whole image.

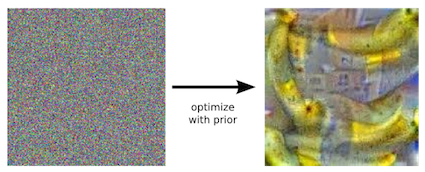

To visualise how this systematic recognition worked, the researchers decided to observe the process in reverse. Starting with white noise, the neural networks were asked to output an image of bananas based on their interpretation. Some constraints on the statistics were put in place in order to make sense of the output, but the interpretation was definitely recognisable as bananas: bananas as viewed through a kaleidoscope, admittedly, but still bananas. This demonstrated that neurons designed to discriminate between images had acquired a lot of the information necessary to generate the images. This ability of the artificial networks has been dubbed ‘dreaming’ due to the slightly surreal effect produced.
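One common way to run a recogniser ‘in reverse’ is to keep the network fixed and repeatedly nudge the input image in whichever direction raises the score for the target class. The sketch below illustrates that idea only; it is not the researchers’ method. The single-neuron ‘banana detector’, its random weights, and the names `score` and `dream` are hypothetical, and clipping pixels to the range 0 to 1 is a crude stand-in for the statistical constraints mentioned above.

```python
import math
import random

def score(weights, image):
    """Toy 'banana detector': one tanh neuron looking at every pixel."""
    return math.tanh(sum(w * p for w, p in zip(weights, image)))

def dream(weights, image, steps=200, rate=0.1):
    """Adjust the pixels, not the weights, so that the detector's score goes up."""
    image = list(image)
    for _ in range(steps):
        s = score(weights, image)
        slope = 1.0 - s * s  # derivative of tanh at the current score
        # Move each pixel along the gradient, then clip to the valid range [0, 1].
        image = [min(1.0, max(0.0, p + rate * slope * w))
                 for w, p in zip(weights, image)]
    return image

rng = random.Random(1)
weights = [rng.uniform(-1.0, 1.0) for _ in range(16)]  # hypothetical 'trained' detector
noise = [rng.random() for _ in range(16)]              # start from random noise
dreamed = dream(weights, noise)
```

Comparing `score(weights, noise)` with `score(weights, dreamed)` shows the detector responding more strongly to the adjusted image than to the noise it started from.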

While this process is defined by well known mathematical models, we understand little of why it works, and why other models don’t.

The problems with AI: Emotions

Is a being with no capacity for emotions capable of more intelligent thought than one that has? Are emotions purely obstacles to reason, or is intelligent thought the product of an interaction between emotion and reason?[2]

For the true meaning of superintelligence to be understood, we would first need to understand whether or not emotion is key to intelligence.

Decision-making and emotions are intricately linked. When facing difficult decisions with conflicting choices, often people are overwhelmed, and their cognitive processes alone are no longer enough to define the better choice. In order to come to a conclusion in these situations, it has been proposed by the neuropsychologist Antonio Damasio that we rely on ‘somatic markers’ to guide our decisions[3]. Before we can understand this hypothesis, we must first take a closer look at what we mean by emotions.

While we label emotions as happy, sad, angry etc., these are just primary emotions, broadly experienced across cultures. Secondary emotions are more evolved – they are influenced by previous experiences and can be based on the specific culture you are immersed in, and so are manifested differently by individuals. Somatic markers are the physical changes in the body that occur when we have an emotion associated with a thing or event from a previous experience or cultural reference; they are, in essence, a physical product of a secondary emotion that lets the brain know we have an emotion associated with the choices we face. This, in turn, gives us some context that makes the decision easier to deal with.

We cannot say conclusively that emotions are key in difficult decisions. However if, after further research, the somatic markers hypothesis gathers the necessary supporting evidence, this would shed some light on the benefit of emotions both to human beings and possibly to artificial intelligence. It is also possible that while emotions may help in difficult decisions, they are not beneficial to our intelligence in other situations.

There has been much debate over whether programming a machine to experience emotions would be possible. While the gap between reality and science fiction still seems vast, there has been a lot of progress in this area. Physiological changes such as facial expressions, body language and sweating are the cues that allow us to read emotions. Computers can now simulate facial expressions when given a certain emotion as input – there has even been a three-dimensional model of a face, ‘Kismet’, that can display expressions relating to emotions[4]. However, it’s important to remember that these are just simulations of feelings, not ‘real’ emotions.

Are advances in this area going to merely produce better fakes, improvements on current simulations, or can we program a machine to feel? To achieve this, we surely have to explore what it is to truly feel an emotion, rather than simply display its characteristics. To feel relies on consciousness, so a machine would need to be independently responsive to its surroundings before it could be said to experience emotions.

Before we can recreate the essence of consciousness in a machine, further studies would need to be carried out into the workings of our own minds. Is there something key in the human mind that cannot be recreated by complex electrical signals? Something that cannot be mathematically defined?

Whether it is achievable or not, maybe we should ask ourselves: would man-made consciousness be a good thing?



The problems with AI: Morality and consequences

When faced with difficult moral dilemmas, it’s debatable whether it’s even beneficial to link an emotion to an outcome. It has been suggested by Eliezer Yudkowsky, of the Machine Intelligence Research Institute (MIRI), that a mathematically defined ‘universal code of ethics’ would need to be devised in order to instil morality in a man-made being[5]. But can a universal code of ethics exist and, if it does, is it too complex to define?

Would a machine with no concept of right or wrong have a positive or negative effect on society?

An example of the potential for destructive behaviour resulting from a lack of morality is ‘tiling the universe’[2]: imagine a robot has been given the task of printing £5 notes. It could attempt to tile the entire universe with £5 notes, under the impression that it was carrying out its given task. The £5 notes would become valueless, and the AI’s aggressive pursuit of printing could amount to terrorism. A code of ethics could prevent this situation from arising, meaning that instructions wouldn’t have to be so carefully defined, and the robot could be spoken to like a human employee.

Conversely, what if a universally defined code of ethics is equally if not more harmful than having no code at all? Can morality, with so many grey areas, truly be defined, or would you end up having a situation where robots act for the greater good as an attempt to find a simple defining factor to link their desired behaviour?

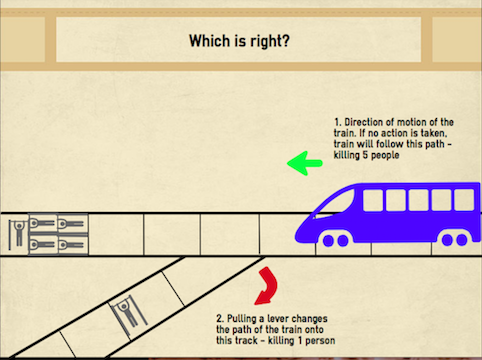

You could argue that acting for the cause of the greater good can never be a bad thing, and is working towards situations where the fewest people are ill-affected. Take, for example, the problem of the runaway train: five people are tied to the tracks ahead, and you can either do nothing and let them be run over, or redirect the train to another track where just one person is tied down. The philosopher Kant argued that you should never treat someone merely as a means to an end: this is one formulation of his categorical imperative[6]. On this view, making the choice to kill the one man is worse than doing nothing and letting five die. However, many people, when faced with this problem, will say they would take a utilitarian standpoint. Arguing that doing nothing and killing the five is, in a sense, just as much of an action as deciding to change the path of the train and killing the one, they would redirect the train so that fewer people died – i.e. the end justifies the means.

Using another theoretical situation, however, alters the responses dramatically: imagine a hospital ward where five sickly patients are in need of different transplants. Without them they will die. Now if your sole motive was to act for the greater good, you should kill a healthy passer-by and use their organs to cure the patients. You have in essence killed one person in order to let five live – an act that works in favour of the greater good and is comparable to the runaway train analogy. At this point, many who previously believed you should act for the greater good change their mind and take the stance that you shouldn’t take a life.

So from this we can already see that a bold code of ethics would face many problems and a lot of criticism, illustrating that developing such a code would be a massive undertaking, as there would be so many cases and exceptions. It is likely that it could never be agreed upon. However, if we could not, or did not want to, program machines with emotions or morality, could they ever rival us or be considered superintelligent?
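To see why a single simple rule struggles here, consider a deliberately naive ‘greater good’ rule written out as code. The function name `fewest_deaths` and the death tolls below are illustrative assumptions taken from the two thought experiments above; this is a sketch of the problem, not a proposal for how machine ethics would actually be implemented.

```python
def fewest_deaths(options):
    """Naive 'greater good' rule: pick whichever option kills the fewest people."""
    return min(options, key=options.get)

# Each dilemma maps a possible action to the number of people who die (illustrative).
runaway_train = {"do nothing": 5, "redirect the train": 1}
transplant_ward = {"do nothing": 5, "kill the passer-by": 1}

train_choice = fewest_deaths(runaway_train)   # "redirect the train"
ward_choice = fewest_deaths(transplant_ward)  # "kill the passer-by"
```

The rule cannot tell the two cases apart, because the numbers are identical. Whatever makes many people answer them differently is exactly the part that has not been written down.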

Another problem arises when we consider the distribution of these artificial beings. Presumably they would have some cost, meaning they would only be available to those who could afford them. What could this mean for social equality and warfare?[2] Additionally, should questions of ownership even be considered? If these beings rival, if not outdo, our own intelligence, should we have the right to claim ownership over them? Or is this merely enslavement?

The Artificial Intelligence ‘Explosion’!

If superintelligent machines are an achievable possibility, they could act as a catalyst for an ‘AI explosion’[2].

A superintelligent machine would have a greater general intelligence than a human being (not purely domain intelligence) and would hence be capable of building machines equally or more intelligent than ourselves. This could be the beginning of a chain reaction: with the superintelligent machines’ efficiency, it could be a matter of months, days or even minutes until the ‘AI explosion’ had occurred, leaving a world overrun with robots. It is a plausible argument that this would be the point at which we could lose control over machines.

The questions related to this field are numerous. It is a topic at the frontier of science that is developing rapidly, and the once futuristic fiction is now looking more and more possible. While the human race always has a drive to pursue knowledge, in a situation with so many possible outcomes, maybe we should exercise caution.

This article was supported with advice and information from Dr Stuart Armstrong, Future of Humanity Institute, University of Oxford.

This article was written by the Things We Don’t Know editorial team, with contributions from Ginny Smith, Johanna Blee, Rowena Fletcher-Wood, and Holly Godwin.

This article was first published on 2015-08-27 and was last updated on 2019-07-31.

References


[1] Mordvintsev, A., Olah, C., Tyka, M. ‘Inceptionism: Going Deeper into Neural Networks’ Google Research Blog, 2015.

[2] Evans, D. ‘The AI Swindle’ SCL Technology Law Futures Conference, 2015. (with further information from following interview).

[3] Damasio, A., Everitt, B., Bishop, D. ‘The Somatic Marker Hypothesis and the Possible Functions of the Prefrontal Cortex’ Royal Society, 1996.

[4] Evans, D. ‘Can robots have emotions?’ School of Informatics Paper, University of Edinburgh, 2004.

[5] Bostrom, N., Yudkowsky, E. ‘The Ethics of Artificial Intelligence’ Cambridge Handbook of Artificial Intelligence, Cambridge University Press, 2011.

[6] Kant, I. ‘Grounding for the Metaphysics of Morals’ (1785) Hackett, 3rd ed. p.3-, 1993.
